There are some things about your website you simply can’t ignore: manual penalties, negative SEO, and the like. Other problems are less obvious, but they can be just as damaging.

In this article, I share four of these data-driven alarm signals. I want you to know exactly which metrics to watch, how to tell whether you’re in danger, and what to do about it.

1. Loss of indexed pages

What it is

Google’s crawler, Googlebot, crawls your site and adds its pages to Google’s vast index, from which they can be returned in search results for relevant queries. As a courtesy to you, Google reports in Google Search Console (GSC) exactly how many of your site’s pages are in its index.

If you are consistently adding new content and maintaining a valid sitemap.xml, this number should rise. Of course, if you intentionally remove certain pages, then the number will go down. However, if the number of indexed pages drops suddenly or declines gradually over time, then you have a problem on your hands.

Why would this happen? Google removes pages from its index for a variety of reasons. Sometimes, pages just drop out of the index because of age or lack of visitors. This is normal. Other times, however, Google penalizes pages by deindexing them because of spam or unnatural backlinks.

When a page is removed from Google’s index, it will no longer be returned in search results. The fewer pages you have indexed, the less likely it is that your site will rank for certain keywords.

Where to get the data

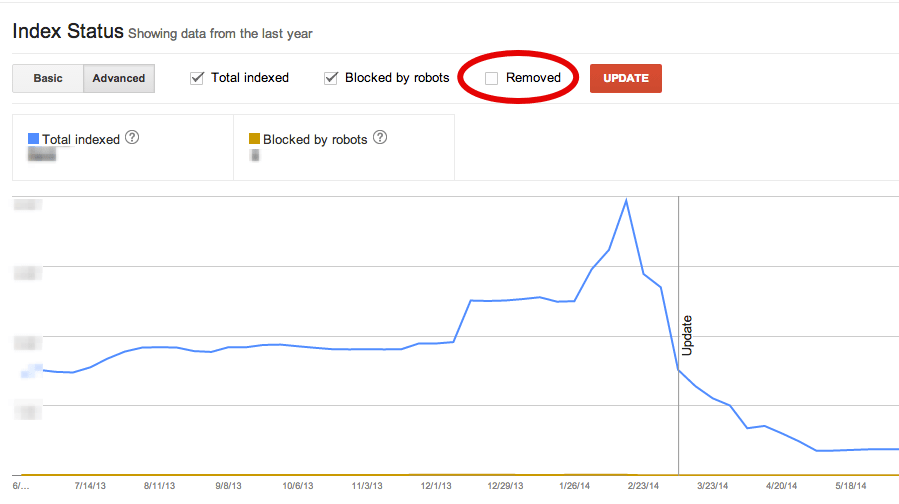

Go to Google Search Console → Google Index → Index Status. This will show you a graph of the total indexed pages over the past twelve months. Make sure that you’ve left the “removed” box unchecked.

The example graph shows a site that experienced a severe loss of indexed pages. In this case, the indexation loss coincided with a manual penalty.
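If you record the total from the Index Status graph at regular intervals, a few lines of code can flag both warning patterns described earlier: a sudden drop and a gradual decline. This is a minimal sketch, assuming you have transcribed or exported weekly totals into a list; the function name `index_alarms` and both thresholds (a 10% single-step drop, four consecutive declines) are hypothetical choices, not values Google publishes.

```python
from typing import List, Tuple


def index_alarms(counts: List[int], sudden_drop: float = 0.10,
                 gradual_steps: int = 4) -> List[Tuple[int, str]]:
    """Return (position, reason) pairs where indexed-page counts look alarming.

    `counts` are indexed-page totals in chronological order. Both thresholds
    are hypothetical defaults, not official values.
    """
    alarms = []
    for i in range(1, len(counts)):
        prev, cur = counts[i - 1], counts[i]
        # Sudden drop: one step loses more than `sudden_drop` of the previous total.
        if prev > 0 and (prev - cur) / prev > sudden_drop:
            alarms.append((i, "sudden drop"))
        # Gradual decline: the count fell at each of the last `gradual_steps` steps.
        if i >= gradual_steps and all(
            counts[j] < counts[j - 1] for j in range(i - gradual_steps + 1, i + 1)
        ):
            alarms.append((i, "gradual decline"))
    return alarms


if __name__ == "__main__":
    weekly = [1000, 1010, 1020, 1015, 1010, 1005, 1000, 800]
    print(index_alarms(weekly))
```

Here the four-week slide is flagged as a gradual decline, and the final 20% loss is flagged as a sudden drop. Tune the thresholds to your site’s normal week-to-week variation.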

What to do

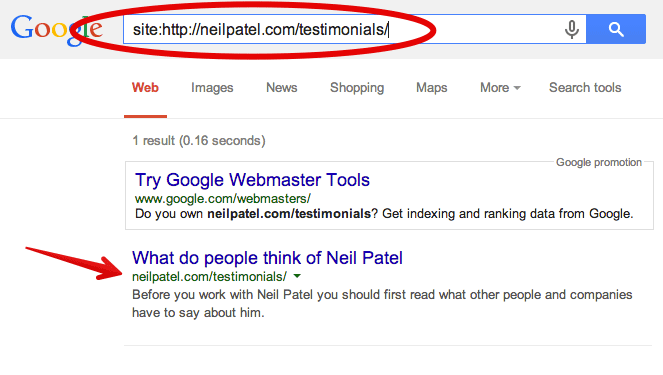

If possible, find out which pages have been deindexed. If your site has only a few pages, losing some of them from the index could be a major blow to traffic, and if the deindexed pages are landing pages, it’s even worse. You can check whether a page is still indexed by searching Google for “site:[your page URL].”

In this case, I checked to see if my page https://neilpatel.com/consulting/ was still indexed by Google. (Thankfully, it is.)
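On a site with more than a handful of pages, it helps to generate the list of “site:” queries from your sitemap instead of typing them out. This is a minimal sketch using Python’s standard-library XML parser; it assumes a standard sitemaps.org `urlset` file (not a sitemap index), and `site_queries` is a hypothetical helper name. It only builds the query strings; you would still run the searches yourself.

```python
import xml.etree.ElementTree as ET
from typing import List
from urllib.parse import urlsplit

# XML namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def site_queries(sitemap_xml: str) -> List[str]:
    """Build one 'site:' query per <loc> entry in a sitemap <urlset> (hypothetical helper)."""
    root = ET.fromstring(sitemap_xml)
    queries = []
    for loc in root.findall("sm:url/sm:loc", SITEMAP_NS):
        url = (loc.text or "").strip()
        if not url:
            continue  # skip empty <loc> elements
        parts = urlsplit(url)
        # Use host + path; the scheme and any query string are dropped.
        queries.append(f"site:{parts.netloc}{parts.path}")
    return queries


if __name__ == "__main__":
    sample = (
        '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
        "<url><loc>https://neilpatel.com/consulting/</loc></url>"
        "</urlset>"
    )
    print(site_queries(sample))  # ['site:neilpatel.com/consulting/']
```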

On large sites with tens of thousands of pages, it’s unrealistic to check every page this way. However, you can still address the likely cause by conducting a backlink audit.

Now is a good time to audit your entire link profile and remove spammy links. I’ve often seen Google start to deindex pages and then, a month or two later, hit the site with a manual penalty. A thorough audit, followed by link removal and disavowal, can help you head off that kind of wholesale deindexation.

2. Duplicate content

What it is

Duplicate content, meaning the same content displayed at multiple locations on or off your site, can erode your rankings and put you at risk of algorithmic penalization. Duplicate content is often the result of oversights during a site’s development (e.g., misuse of session IDs or URL parameters). Other times, it results from scrapers, or from content syndication without a rel=canonical tag.
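Parameter-driven duplicates like these can often be spotted by normalizing URLs before comparing them. The sketch below assumes a hypothetical list of parameters (`IGNORED_PARAMS`) that change the URL without changing the content; each group it returns is a set of URLs that likely serve the same page and are candidates for a rel=canonical tag pointing at one preferred version. Which parameters are actually safe to ignore depends entirely on how your site is built.

```python
from collections import defaultdict
from typing import Dict, List
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical: parameters assumed NOT to change page content on this site.
IGNORED_PARAMS = {"sessionid", "sid", "utm_source", "utm_medium", "utm_campaign"}


def canonical_key(url: str) -> str:
    """Normalize a URL: lowercase the host, drop ignored params, sort the rest."""
    parts = urlsplit(url)
    kept = sorted(
        (k, v) for k, v in parse_qsl(parts.query) if k.lower() not in IGNORED_PARAMS
    )
    # The fragment is dropped because browsers never send it to the server.
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), ""))


def duplicate_groups(urls: List[str]) -> Dict[str, List[str]]:
    """Group URLs by normalized form and keep only groups with 2+ members."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for url in urls:
        groups[canonical_key(url)].append(url)
    return {key: members for key, members in groups.items() if len(members) > 1}
```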

Where to get the data

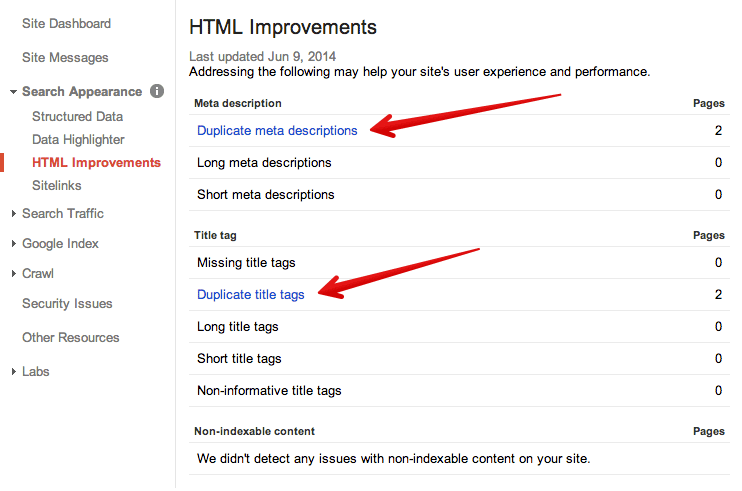

There are plenty of tools that can help you find and fix duplicate content. Screaming Frog is one of my favorites. However, GSC gives you easy, accurate access to one common kind of duplication: duplicate title tags and meta descriptions.

Go to Google Search Console → Search Appearance → HTML Improvements.

The site in this example has only a few duplicate content issues. These are probably not enough to cause any serious traffic loss or penalization.

What to do

The fix for the duplicate content listed in GSC is straightforward: go to each page flagged for a duplicate title tag or meta description, and rewrite it so that it is unique.
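If you would rather check titles yourself, for instance from a crawler export, grouping pages by normalized title text surfaces the same kind of duplicates. This sketch assumes you already have a mapping of URL to `<title>` text; how you obtain it (a Screaming Frog export, your CMS, etc.) and the `duplicate_titles` name are assumptions for illustration.

```python
from collections import defaultdict
from typing import Dict, List


def duplicate_titles(pages: Dict[str, str]) -> Dict[str, List[str]]:
    """Group URLs sharing the same <title> text, ignoring case and extra whitespace.

    `pages` maps URL -> title text (hypothetical input format).
    """
    by_title: Dict[str, List[str]] = defaultdict(list)
    for url, title in pages.items():
        # Collapse runs of whitespace and lowercase so trivial variants match.
        key = " ".join(title.split()).lower()
        by_title[key].append(url)
    return {title: sorted(urls) for title, urls in by_title.items() if len(urls) > 1}
```

Each key in the result is a title shared by two or more pages; those pages are the ones to rewrite.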

3. Lost links

What it is

A great link profile is at the core of a great site. You simply can’t have a strong web presence unless you’ve got plenty of healthy links pointing back to your site.

But what if those links start disappearing? It can happen, and when it does, you will have a problem on your hands. Lost linkbacks mean lost ranking, lost traffic, and lost revenue.

Though spammy link building is a relic of a bygone SEO era, it is still important to work hard at earning high-quality backlinks. You can do so through content marketing and careful guest blogging.

But links don’t last forever. According to Internet Live Stats, there are more than 1.5 billion websites on the World Wide Web today, and fewer than 200 million of those are active. This steady disappearance of websites, sometimes measured as a “churn rate,” means that you will inevitably lose some links.
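One way to put a number on this is to compare two snapshots of your backlinks, for example exports from the same tool taken a month apart. The sketch below treats each link as a string (such as the linking page’s URL) and reports which ones disappeared plus the share lost; calling that share a “churn rate” is a simplifying assumption, and `lost_links` is a hypothetical helper. Comparing exports from different tools would mix in differences in each tool’s crawl coverage.

```python
from typing import Set, Tuple


def lost_links(before: Set[str], after: Set[str]) -> Tuple[Set[str], float]:
    """Return links seen in `before` but not in `after`, and the fraction lost.

    Each element is one backlink, identified here by its linking URL (an assumption).
    """
    lost = before - after
    # Share of the earlier snapshot that is now missing (0.0 if there was nothing to lose).
    churn = len(lost) / len(before) if before else 0.0
    return lost, churn
```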

Where to get the data

There are plenty of tools for analyzing your link data. I’ve probably used every one on the market: Majestic, Moz, LinkResearchTools, SEMrush, etc. I’ve also developed tools, such as Crazy Egg, that help you understand your metrics and visitor behavior.

You can get backlink counts for free from the Backlink Checker tool, or by using Google Search Console.

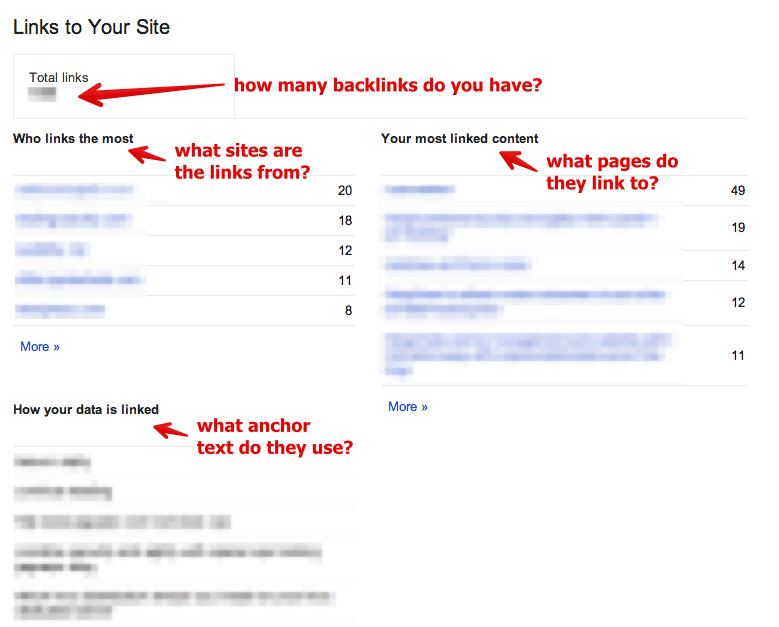

I recommend that you keep a close eye on this number and on this page in Google Search Console. Go to Search Traffic → Links to Your Site.

- Total links: If this number declines gradually or sharply, it indicates a loss of linkbacks and the potential for site decline. Remedial action: Content marketing improvements.

- Who links the most: Most of your links should be coming from other niche sites, blogs, industry websites, etc. If you see an alarming amount of off-niche, porn, gambling, or spam sites, you have a problem. Remedial action: Conduct a link profile audit.

- Your most linked content: Links should be pointing at your landing pages, content pages, or other significant pages. If you discover old, irrelevant, or undesirable pages being linked to, it’s a sign that you need to improve your content marketing. Remedial action: Content audit. Target the most-linked-to pages for improvement. Continue content marketing.

- How your data is linked: This is the anchor text used in the links. If the anchor text is irrelevant, spammy, or over-optimized, you could have a problem. Remedial action: Link profile audit.
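For the anchor-text check, a quick way to spot over-optimization is to measure what share of your links use each anchor. This sketch assumes you have a list of anchor texts (one per link) from GSC or a backlink tool; the set of “money” keywords and the 30% threshold are hypothetical placeholders, not an official cutoff.

```python
from collections import Counter
from typing import Dict, Iterable, List


def anchor_shares(anchors: Iterable[str]) -> Dict[str, float]:
    """Fraction of links using each anchor text (case- and whitespace-normalized)."""
    counts = Counter(" ".join(a.split()).lower() for a in anchors)
    total = sum(counts.values())
    # most_common() orders anchors from most to least used.
    return {anchor: n / total for anchor, n in counts.most_common()}


def over_optimized(anchors: Iterable[str], money_terms: Iterable[str],
                   threshold: float = 0.3) -> List[str]:
    """Keyword anchors whose share of all links exceeds `threshold` (hypothetical cutoff)."""
    terms = {" ".join(t.split()).lower() for t in money_terms}
    return [a for a, share in anchor_shares(anchors).items()
            if a in terms and share > threshold]
```

Branded and URL anchors (“Example Store,” “example.com”) are normally expected to make up a healthy share; a single exact-match keyword dominating the list is the pattern worth investigating.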

4. Organic traffic decline

What it is

When your organic traffic goes down, you need to deal with the issue as soon as possible. Every site faces fluctuation, but if a fluctuation turns into a trend, it’s an alarm signal.

Where to get the data

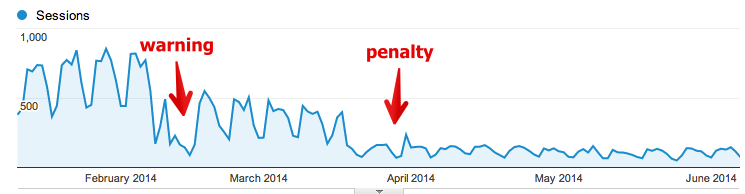

In Google Analytics, go to Acquisition → Keywords → Organic. It doesn’t matter that most individual keywords appear as “(not provided).” What does matter is whether your organic keyword traffic is going up or down. The graph shown here indicates a problem.

For this site, the “warning” point was the first sign that something was wrong. The site’s organic keyword traffic normally has peaks and troughs, but this dip was sudden and lower than usual. The next peak did not rebound to the previous average, and one month later the site received a manual penalty.

Manual penalties do not always come with warning signs like this one. Still, a sustained change in organic keyword traffic usually indicates some kind of problem, such as a spammy link profile or deindexed pages.
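A “lower than usual” dip like the one in this example can be flagged automatically by comparing each period with the recent past. The sketch below uses a common statistical rule of thumb, not anything built into Google Analytics: flag a period whose organic sessions fall more than `k` standard deviations below the mean of the preceding `window` periods. The window of 8 and `k = 2` are assumptions you would tune to your site’s seasonality, and `unusual_dips` is a hypothetical name.

```python
from statistics import mean, stdev
from typing import List


def unusual_dips(sessions: List[float], window: int = 8, k: float = 2.0) -> List[int]:
    """Positions where traffic falls more than k std devs below the trailing mean.

    `sessions` are organic sessions per period, oldest first (hypothetical input).
    """
    if window < 2:
        raise ValueError("window must be at least 2 to compute a standard deviation")
    flagged = []
    for i in range(window, len(sessions)):
        history = sessions[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sessions[i] < mu - k * sigma:
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    weekly = [100, 120, 100, 120, 100, 120, 100, 120, 60]
    print(unusual_dips(weekly))  # [8]
```

Normal peaks and troughs stay inside the band; only the sharp drop at the end is flagged.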

What to do

Just as the causes of organic traffic loss are legion, so are the treatments. First, you need to find out exactly why traffic declined. Ask questions, and answer them with data:

- What changes happened in the backlink profile?

- What other traffic sources changed?

- Was there an algorithmic change?

Ask as many questions as you can until you’re able to form a hypothesis — a possible reason for the traffic decline.
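For the question about other traffic sources, comparing each channel’s sessions across two equal periods is a reasonable first pass: if organic fell while referral and direct held steady, the problem is more likely search-specific. This is a sketch; the input format (a dict of channel name to session count for a period before and after the decline) and the `source_changes` name are assumptions.

```python
import math
from typing import Dict


def source_changes(before: Dict[str, int], after: Dict[str, int]) -> Dict[str, float]:
    """Percent change in sessions per traffic source, steepest decline first."""
    changes = {}
    for source in set(before) | set(after):
        old, new = before.get(source, 0), after.get(source, 0)
        if old:
            changes[source] = (new - old) * 100 / old
        else:
            # Channel absent before: infinite growth if it now has traffic, else no change.
            changes[source] = math.inf if new else 0.0
    # Sort ascending so the largest drops are listed first.
    return dict(sorted(changes.items(), key=lambda item: item[1]))
```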

Some of the best big-picture solutions are also some of the most basic: reevaluating keywords, improving content marketing, removing toxic backlinks, etc. There aren’t any stock answers to this problem; it must be solved on a case-by-case basis.

Conclusion

The SEO’s job is not merely to optimize title tags and create keyword-rich content. The SEO’s job is to study the numbers, to identify problems, and to develop strategic solutions. If you take a look at each of the data points I’ve described in this article, you may be able to prevent some major disasters or simply improve your SEO.

What other data-driven alarm signals should the SEO watch?
