Twelve months. That’s how long many conversion rate optimization (CRO) professionals say you need to wait to see results or earn back your investment.

Alternatively, you can do your own testing and potentially waste even more time waiting for good results.

People are starting to believe conversion optimization doesn’t work at all. The promising case studies they’ve seen can be misleading, and many of the tests people run themselves never reach statistical significance.

But conversion optimization can be extremely profitable. Many-fold increases are possible with systematic and well-conducted conversion optimization.

The key is to test the right things in the right order.

Statistics or Business?

“Change one thing at a time so you know exactly how each change affects conversion rates.”

Maybe you’ve heard that. It kind of makes sense, too.

But it’s hopelessly slow and ineffective. Yet, many “experts” say it’s the right way to do A/B testing.

Sure, if you’re writing a doctoral dissertation, you might want to stick to single changes to get scientifically accurate data. And to be fair, changing just one thing on a page is sometimes the right choice.

But assuming you’re after concrete business results—sales, prospects, leads, contacts—you should usually stay away from the “test one thing at a time” idea.

Instead, test the message.

Test the Message, Not the Words

Some back-story might be necessary here.

The landing pages with the highest conversion rates work so well because they focus on the right ideas; they make visitors understand why they should take action (convert).

To do that, you need to know the reasons that make your target customers convert. In other words, you need to know your value proposition.

Your value proposition is the collection of the best believable reasons your target customers have for taking the action you’re asking for.

When it comes to A/B testing, the key phrase is “collection of reasons”: you should start by testing which of the ideas in the collection need to be the most prominent to get the highest conversion rate.

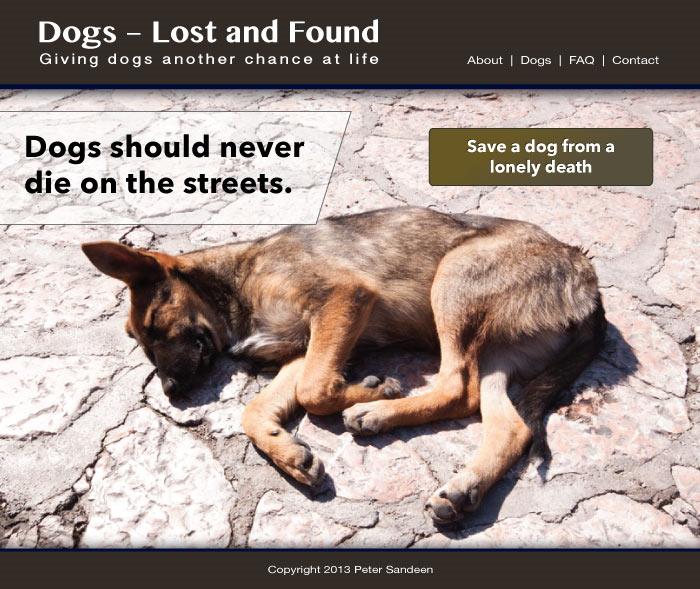

Here’s an example of a (fictional) dog shelter’s home page:

The message of the page (the idea from the value proposition that the page focuses on) is that it’s unfair that some dogs don’t get a chance to be happy and have to live in cages, and that you can help at least one of them.

Everything on the page focuses on that message.

- The tagline, “Giving dogs another chance at life”

- The main headline, “When can we get out?”

- The button text/call to action, “Give a dog another chance to be happy”

- The picture of caged cute puppies

If you test one thing at a time, the test version could look like this:

The change (the headline) is too small to make a significant difference because it doesn’t change the message of the page; visitors don’t perceive anything as different.
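Part of why tiny changes rarely pay off is statistical: the smaller the difference in conversion rate, the more visitors you need before a test can detect it. The sketch below uses the standard normal-approximation sample-size formula for comparing two proportions. The 3% baseline conversion rate, the two lift sizes, the 5% significance level, and the 80% power are all assumptions chosen for illustration, not figures from this article.

```python
from math import ceil
from statistics import NormalDist

def visitors_per_variant(baseline, relative_lift, alpha=0.05, power=0.8):
    """Approximate visitors needed per page version for a two-sided
    two-proportion z-test to detect the given relative lift."""
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_power = NormalDist().inv_cdf(power)          # about 0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)

# Assumed 3% baseline conversion rate (hypothetical).
tiny_change = visitors_per_variant(0.03, 0.05)  # reworded headline: +5% relative lift
new_message = visitors_per_variant(0.03, 0.50)  # different message: +50% relative lift
print(f"+5% lift:  {tiny_change:,} visitors per version")
print(f"+50% lift: {new_message:,} visitors per version")
```

Under these assumptions, detecting the small lift takes roughly 80 times as many visitors as detecting the large one, which is why tests of minor tweaks so often run for months without a clear winner.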

Instead of making tiny changes like that, your test should focus on the message of the page. Here’s what the test could look like:

This page would most likely create a significant difference in conversion rate because the message is so different: you can get a new family member and best friend.

You should test all the different messages (reasons) in your value proposition to find the one that works best.
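To decide whether a message variation really beat the original, one common approach is a two-proportion z-test. The sketch below is hedged in several ways: the visitor and conversion counts are hypothetical, the variation names are made up for the dog shelter example, and it applies a Bonferroni correction (splitting the significance level across comparisons) because several variations are compared against the same original. The article doesn’t prescribe a specific test, and most A/B testing tools run their own statistics.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value of a pooled two-proportion z-test."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))

# Hypothetical results: (conversions, visitors) for each page version.
control = (120, 4000)
variations = {
    "new family member": (165, 4000),
    "reworded headline": (126, 4000),
}

alpha = 0.05
# Bonferroni correction: divide alpha by the number of comparisons with the control.
threshold = alpha / len(variations)

results = {}
for name, (conv, n) in variations.items():
    p = two_proportion_p_value(*control, conv, n)
    results[name] = p
    verdict = "significant" if p < threshold else "not significant"
    print(f"{name}: p = {p:.4f} ({verdict})")
```

With these made-up numbers, the page with a different message clears the corrected threshold, while the reworded headline is nowhere close.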

Learn and Adjust Accordingly

After testing minuscule details, unorganized testing is the second most common reason for limited or nonexistent results from A/B testing.

If you want to see great results, you can’t do conversion optimization haphazardly. Instead, you need to base decisions on what you’ve already learned.

For example, let’s say the original home page (in the previous example) had a higher conversion rate than any variation you came up with. What next?

When you start testing a page, you’re looking for the best message. When you know the best message, try exaggerating it.

In this case, maybe the page below would have an even higher conversion rate because it goes one step further in getting the original message across (it’s unfair that some dogs don’t have a real chance at life).

I’d expect this page to have a lower conversion rate than the original because it’s so negative. But you never know until you test it.

Once you’re happy with the message and its intensity, it’s time to move on to A/B testing “big details.”

Mixing and Refining the Messages

Until this point, you’ve made each page focus on just one message from your value proposition. That’s how you find out what should be the primary message.

Now you can test mixing in secondary messages. These are ideas in your value proposition, but they’re not quite as persuasive as the primary message (as your previous testing showed).

Continuing with our dog shelter example, let’s assume that the original page beat all variations, and the variation focusing on family was the second best. Below is a page you could test next.

Most of the page is identical to the original, but one key element—the headline—is focused on the secondary message.

You should also try putting the secondary message in the button text (or other page elements) instead of the headline to find the ideal place(s) for it.

You can also try squeezing in a third message, and maybe a fourth. How many messages a page should have depends on its purpose, its length, and the messages themselves; you can’t clearly communicate several complicated ideas in just a few words.

But on short landing pages, the first two messages usually account for most of the conversion rate, and additional messages just create confusion.

An Effective Way to A/B Test Details

Finally, you can move on to testing details, which is what many people start with.

But even though you’re changing only details, A/B testing can still produce significant results.

The key is visitors’ perception. For example, changing the wording of a headline as in the earlier example won’t make a difference in your conversion rates unless it changes what visitors perceive.

So you need to look at each page systematically, find its weaknesses, and then test different solutions to those issues.

For example, the home page for the dog shelter might need to address some common objections. Specifically, people might be worried that shelter dogs aren’t healthy.

So create a test to check whether that assumption is right:

Let’s say this version wins the A/B test. As with testing the main message, once you know which direction seems to work better, you can try amplifying the idea.

The version below would test if people need even more reassurance about the dogs’ health. It also addresses the worry about how difficult it is to get a shelter dog.

As long as you can find potential issues, you can create more tests and keep increasing your conversion rates.

Focus on Measurable Results

Sure, it’s nice to know exactly how each element on a page affects conversion rates in relation to all the other elements. But you’ll never really know that unless you create a massive multivariate test with hundreds of variations, which is hardly ever viable.
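The arithmetic behind “hundreds of variations” is multiplication: a full-factorial multivariate test shows every combination of the options you try for each element. The element list, the option counts, and the per-variation traffic figure below are hypothetical, picked only to show how quickly the numbers grow.

```python
from math import prod

# Hypothetical number of versions to try for each page element.
options_per_element = {
    "tagline": 3,
    "headline": 4,
    "button text": 3,
    "image": 3,
    "testimonial": 2,
}

# A full-factorial test needs one page variation per combination of options.
combinations = prod(options_per_element.values())
print(f"{combinations} page variations")

# Assumed visitors needed per variation (e.g., from a sample-size calculation).
visitors_each = 2_500
print(f"{combinations * visitors_each:,} visitors in total")
```

Five elements with a few options each already produce 216 variations, and the traffic requirement scales with every one of them.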

Rather, focus on getting real business results with A/B testing and conversion optimization.

Don’t shy away from them just because you haven’t seen significant results yet. And if you’ve hired someone to do the testing for you, don’t let them just test minor details hoping for a freaky stroke of luck.

Start by getting clear about your value proposition. If you don’t know the best reasons people have for doing what you’re asking them to do, you don’t know what to test and can’t hope to see great results.

Then test which aspects of it pack the biggest punch before moving to details.

You’ll see significant results from A/B testing in far less time than before.

About the Author: Right now, Peter Sandeen is sailing with his wife and dogs while the weather is still warm (he lives in Finland). But you can download his 5-step system for quickly finding the core of your value proposition and his landing page checklist to improve your conversion rates.
