You know all about search engine optimization — the importance of a well-structured site, relevant keywords, appropriate tagging, technical standards, and lots and lots of content. But chances are you don’t think a lot about Googlebot optimization.

Googlebot optimization isn’t the same thing as search engine optimization, because it goes a level deeper. Search engine optimization focuses on optimizing for users’ queries. Googlebot optimization focuses on how Google’s crawler accesses your site.

There’s a lot of overlap, of course. However, I want to make this important distinction, because there are foundational ways in which it can affect your site. A site’s crawlability is the important first step to ensuring its searchability.

What is Googlebot?

Googlebot is Google’s search bot that crawls the web and creates an index. It’s also known as a spider. The bot crawls every page it’s allowed access to, and adds it to the index where it can be accessed and returned by users’ search queries.

My animated infographic on How Google Works shows you how the spiders fetch the web and index the information.

The whole idea of how Googlebot crawls your site is crucial to understanding Googlebot optimization. Here are the basics:

- Googlebot spends more time crawling sites with significant PageRank. The amount of time that Googlebot gives to your site is called “crawl budget.” The greater a page’s authority, the more crawl budget it receives.

- Googlebot is always crawling your site. Google’s Googlebot article says this: “Googlebot shouldn’t access your site more than once every few seconds on average.” In other words, your site is always being crawled, provided it is correctly accepting crawlers. There’s a lot of discussion in the SEO world about “crawl rate” and how to get Google to recrawl your site for optimal ranking. The terminology is misleading, because Google’s “crawl rate” refers to the speed of Googlebot’s requests, not how often it recrawls your site. You can alter the crawl rate within Webmaster Tools (gear icon → Site Settings → Crawl rate). Googlebot consistently crawls your site, and the more freshness, backlinks, social mentions, etc. it has, the more likely it is that your site will appear in search results. It’s important to note that Googlebot does not crawl every page on your site all the time. This is a good place to point out the importance of consistent content marketing: fresh, consistent content always gains the crawler’s attention and improves the likelihood of top-ranked pages.

- Googlebot first accesses a site’s robots.txt to find out the rules for crawling the site. Any pages that are disallowed will not be crawled, although a disallowed URL can still show up in the index if other pages link to it.

- Googlebot uses the sitemap.xml to discover the areas of the site to be crawled and indexed. Because of the variation in how sites are built and organized, the crawler may not automatically crawl every page or section. Dynamic content, low-ranked pages, or vast content archives with little internal linking could benefit from an accurately constructed Sitemap. Sitemaps are also beneficial for advising Google about the metadata behind categories like video, images, mobile, and news.
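To make the last two points concrete, here is a minimal sketch of how a polite crawler might read robots.txt first and then pick up the sitemap location from it. It uses Python’s standard `urllib.robotparser`. The robots.txt contents, the example.com URLs, and the `crawl_plan` helper are all assumptions for illustration; this is not how Googlebot itself is implemented.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for an example site; paths and sitemap URL are made up.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /search

Sitemap: https://www.example.com/sitemap.xml
"""


def crawl_plan(robots_txt, candidate_urls, agent="Googlebot"):
    """Return (allowed_urls, sitemap_urls) derived from a robots.txt string."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # Keep only the URLs the rules allow this user agent to fetch.
    allowed = [url for url in candidate_urls if parser.can_fetch(agent, url)]
    # site_maps() returns None when no Sitemap line is present (Python 3.8+).
    sitemaps = parser.site_maps() or []
    return allowed, sitemaps


urls = [
    "https://www.example.com/",
    "https://www.example.com/blog/googlebot",
    "https://www.example.com/admin/login",
    "https://www.example.com/search?q=seo",
]
allowed, sitemaps = crawl_plan(ROBOTS_TXT, urls)
print(allowed)
print(sitemaps)
```

The two disallowed URLs drop out of the list, and the sitemap URL is what a crawler would fetch next to discover pages it might not reach through links alone.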

Six Principles for a Googlebot Optimized Site

Since Googlebot optimization comes a step before search engine optimization, it’s important that your site be as easily and accurately indexed as possible. I’m going to explain how to do this.

1. Don’t get too fancy.

I say “don’t get too fancy” because Googlebot doesn’t crawl JavaScript, frames, DHTML, Flash, and Ajax content as well as good ol’ HTML.

Google hasn’t been forthcoming on how well or how much Googlebot parses JavaScript and Ajax. Since the jury is out, and opinions run the gamut, you’re probably best off not consigning most of your important site elements and content to Ajax/JavaScript.

I realize that Matt Cutts told us that we can open up JavaScript to the crawler. But some evidence points the other way, and the Google Webmaster Guidelines still proffer this bit of advice:

“If fancy features such as JavaScript, cookies, session IDs, frames, DHTML, or Flash keep you from seeing all of your site in a text browser, then search engine spiders may have trouble crawling your site.”

I side with skepticism on the use of JavaScript. Conjecture what you wish, but basically, don’t get too fancy.
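If you want a rough version of the “text browser” test from that guideline, here is a small sketch using Python’s standard `html.parser`. It collects the text that is present in the raw HTML and ignores anything inside script or style tags, so content that only appears after JavaScript runs will be missing. The sample page and the `visible_text` helper are assumptions for illustration, and this says nothing about what Googlebot actually renders.

```python
from html.parser import HTMLParser


class TextOnlyView(HTMLParser):
    """Collect the text a script-free, text-only client would see."""

    SKIPPED = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Ignore whitespace-only runs and anything inside script/style.
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def visible_text(html):
    view = TextOnlyView()
    view.feed(html)
    view.close()
    return " ".join(view.chunks)


# Hypothetical page: the pricing text only exists after the script runs.
PAGE = """
<html><body>
  <h1>Googlebot optimization</h1>
  <p>Crawlability comes before searchability.</p>
  <div id="pricing"></div>
  <script>document.getElementById("pricing").textContent = "Plans start at $10";</script>
</body></html>
"""

text = visible_text(PAGE)
print(text)
print("Plans start at $10" in text)
```

If a phrase you care about ranking for does not show up in this text-only view, that is a hint it depends on scripting to appear.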

2. Do the right thing with your robots.txt.

Have you ever really thought about why you need a robots.txt? It’s standard best practice for SEO, but why?

A robots.txt is essential because it serves as a directive to the all-important Googlebot. Left alone, Googlebot will spend its crawl budget on any page on your site. You need to tell Googlebot where it should and shouldn’t expend crawl budget. If there are any pages or silos of your site that should not be crawled, please modify your robots.txt accordingly.

The less time Googlebot spends on unnecessary sections of your site, the more time it has to crawl and return the more important sections.

As a friendly bit of advice, please don’t block pages or sections of your site that you want to appear in search. Googlebot’s default mode is to crawl and index everything. The whole point of robots.txt is to tell Googlebot where it shouldn’t go. Let the crawler loose on whatever you want to be part of Google’s index.
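One way to catch an accidental block before it costs you traffic is to test a draft robots.txt against a list of pages you definitely want crawled. The sketch below does that with Python’s standard `urllib.robotparser`. The draft rules, the example.com URLs, and the `blocked_important_pages` helper are assumptions for illustration, and Google’s own matching rules may differ in edge cases such as wildcards.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical draft: the /blog/ rule is the kind of mistake worth catching.
DRAFT_ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /cart/
Disallow: /blog/
"""

# Hypothetical pages that should stay crawlable.
MUST_BE_CRAWLABLE = [
    "https://www.example.com/",
    "https://www.example.com/services/",
    "https://www.example.com/blog/crawl-budget",
]


def blocked_important_pages(robots_txt, important_urls, agent="Googlebot"):
    """Return the important URLs that the given robots.txt would block."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in important_urls if not parser.can_fetch(agent, url)]


problems = blocked_important_pages(DRAFT_ROBOTS_TXT, MUST_BE_CRAWLABLE)
print(problems)
```

An empty list means none of your important pages are disallowed. Here the blog post is flagged, which suggests the /blog/ rule should be removed or narrowed.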

3. Create fresh content.

Content that is crawled more frequently is more likely to gain more traffic. Although PageRank is probably the determinative factor in crawl frequency, it’s likely that PageRank becomes less important than freshness when similarly ranked pages are compared.

It’s especially crucial for Googlebot optimization to get your low-ranked pages crawled as often as possible. As AJ Kohn wrote, “You win if you get your low PageRank pages crawled more frequently than the competition.”

4. Optimize infinite scrolling pages.

If you utilize an infinite scrolling page, then you are not necessarily ruining your chance at Googlebot optimization. However, you need to ensure that your infinite scrolling pages comply with the stipulations provided by Google and explained in my article on the subject.

5. Use internal linking.

Internal linking is, in essence, a map for Googlebot to follow as it crawls your site. The more integrated and tight-knit your internal linking structure, the better Googlebot will crawl your site.

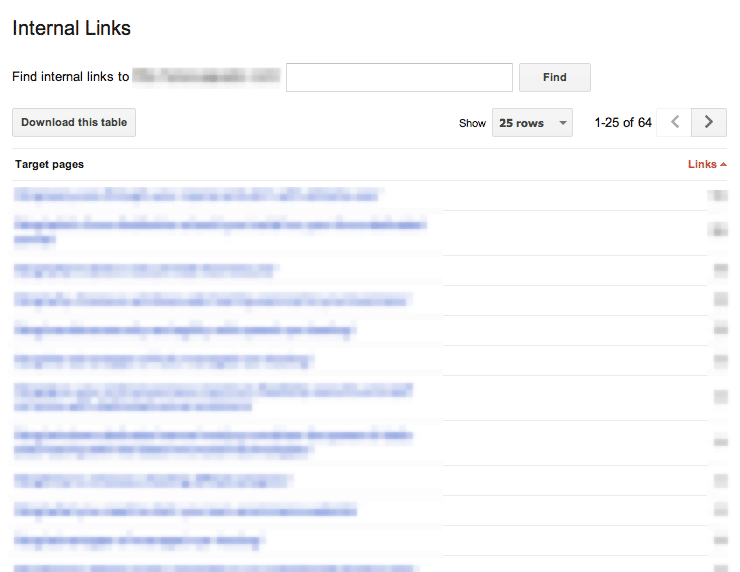

An accurate way to analyze your internal linking structure is to go to Google Search Console → Search Traffic → Internal Links. If the pages at the top of the list are strong content pages that you want to be returned in the SERPs, then you’re doing well. These top-linked pages should be your site’s most important pages.
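If you have your own list of pages and the links on each one, you can run a similar check yourself. The sketch below counts how many distinct internal pages link to each page and flags pages that nothing links to. The link graph is entirely made up, and `inbound_link_counts` is a hypothetical helper, not a reproduction of the Search Console report.

```python
from collections import Counter

# Hypothetical link graph: each page maps to the internal pages it links to.
LINKS = {
    "/": ["/blog/", "/services/", "/about/"],
    "/blog/": ["/blog/googlebot/", "/services/", "/"],
    "/blog/googlebot/": ["/services/", "/blog/", "/"],
    "/services/": ["/", "/about/"],
    "/about/": ["/"],
    "/blog/old-post/": ["/"],
}


def inbound_link_counts(links):
    """Count, for each page, how many other pages link to it."""
    counts = Counter({page: 0 for page in links})
    for source, targets in links.items():
        # set() so repeated links from one page count once; skip self-links.
        for target in set(targets):
            if target != source:
                counts[target] += 1
    return counts


counts = inbound_link_counts(LINKS)
orphans = [page for page, n in counts.items() if n == 0]

for page, n in counts.most_common():
    print(n, page)
print("No inbound links:", orphans)
```

Pages with zero inbound links are the ones a crawler is least likely to reach by following links, so they are good candidates for new internal links or a sitemap entry.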

6. Create a sitemap.xml.

Your sitemap is one of the clearest messages to the Googlebot about how to access your site. Basically, a Sitemap does exactly what the name suggests — serves as a map to your site for the Googlebot to follow. Not every site can be crawled easily. Complicating factors may, for lack of a better word, “confuse” Googlebot or get it sidetracked as it crawls your site.

Sitemaps provide a necessary corrective to these missteps, and ensure that all the areas of your site that need to be crawled will be crawled.
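For a sense of what a basic sitemap.xml contains, here is a minimal sketch that builds one with Python’s standard `xml.etree.ElementTree`. It uses the `urlset`, `url`, `loc`, and `lastmod` elements from the sitemaps.org protocol. The page URLs, dates, and the `build_sitemap` helper are assumptions for illustration, and a real site would usually generate this from its CMS or database.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (absolute_url, 'YYYY-MM-DD') tuples."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages and last-modified dates.
PAGES = [
    ("https://www.example.com/", "2015-03-01"),
    ("https://www.example.com/blog/googlebot", "2015-03-10"),
]

sitemap_xml = build_sitemap(PAGES)
print(sitemap_xml)
```

Save the output as sitemap.xml at the root of your site and submit it in the Sitemaps section of Webmaster Tools.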

Analyzing Googlebot’s Performance on Your Site

The great thing about Googlebot optimization is that you don’t have to rely on guesswork to see how your site is performing with the crawler. Google Webmaster Tools provides helpful information on the main features.

This is a limited set of data, but it will alert you to any major problems or trends with your site’s crawl performance.

Log in to Webmaster Tools, and go to “Crawl” to check these diagnostics.

Crawl Errors

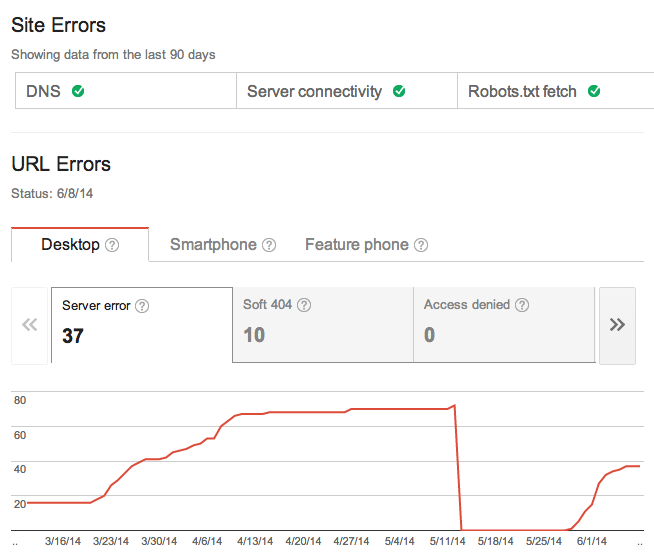

You can find out if your site is experiencing any problems with crawl status. As Googlebot routinely crawls the web, your site will either be crawled with no issues, or it will throw up some red flags, such as pages that the bot expected to be there based on the last index but could not find. Checking out crawl errors is your first step for Googlebot optimization.

Some sites have crawl errors, but the errors are so few or insignificant that they don’t automatically affect traffic or ranking. Over time, however, such errors are usually correlated with traffic decline. Here is an example of a site that is experiencing errors:

Crawl Stats

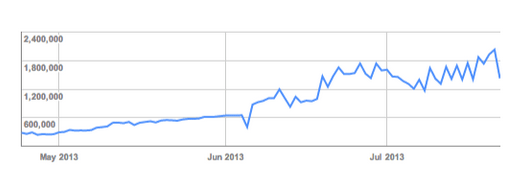

Google tells you how many pages and kilobytes the bot analyzes per day. A proactive content marketing campaign that regularly pushes fresh content will provide a positive upward momentum for these stats.
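If you have access to your server logs, you can approximate the same two numbers yourself. The sketch below counts requests and kilobytes per day for lines whose user agent mentions Googlebot. It assumes the common “combined” access log format, the sample lines are made up, and `googlebot_daily_stats` is a hypothetical helper. Keep in mind that a user agent string can be spoofed, so treat this as a rough cross-check rather than a replacement for the Crawl Stats report.

```python
import re
from collections import defaultdict

# Assumes the "combined" access log format; adjust the pattern for other formats.
LOG_LINE = re.compile(
    r'\S+ \S+ \S+ \[(?P<day>[^:]+):[^\]]+\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\d+|-) "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_daily_stats(lines):
    """Return {day: {"pages": n, "kilobytes": kb}} for Googlebot requests."""
    stats = defaultdict(lambda: {"pages": 0, "kilobytes": 0.0})
    for line in lines:
        match = LOG_LINE.match(line)
        # Skip unparseable lines and requests from other user agents.
        if not match or "Googlebot" not in match["agent"]:
            continue
        day = stats[match["day"]]
        day["pages"] += 1
        if match["size"] != "-":
            day["kilobytes"] += int(match["size"]) / 1024
    return dict(stats)


GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

# Made-up sample lines for illustration.
SAMPLE_LOG = [
    f'66.249.66.1 - - [10/Mar/2015:06:25:14 +0000] "GET /blog/ HTTP/1.1" 200 20480 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Mar/2015:06:25:20 +0000] "GET /services/ HTTP/1.1" 200 10240 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.9 - - [10/Mar/2015:07:00:00 +0000] "GET / HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 6.1)"',
    f'66.249.66.1 - - [11/Mar/2015:01:02:03 +0000] "GET /old-page HTTP/1.1" 404 512 "-" "{GOOGLEBOT_UA}"',
]

daily = googlebot_daily_stats(SAMPLE_LOG)
for day, numbers in daily.items():
    print(day, numbers)
```

Tracking these numbers over a few weeks gives you a feel for whether new content is actually pulling the crawler back more often.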

Fetch as Google

The “Fetch as Google” feature allows you to look at your site or individual pages the way that Google would.

Blocked URLs

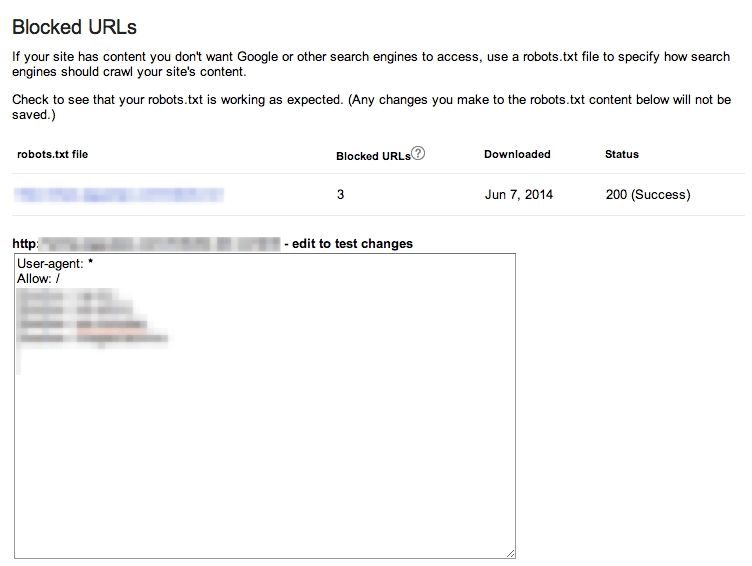

If you want to check and see if your robots.txt is working, then “Blocked URLs” will tell you what you need to know.

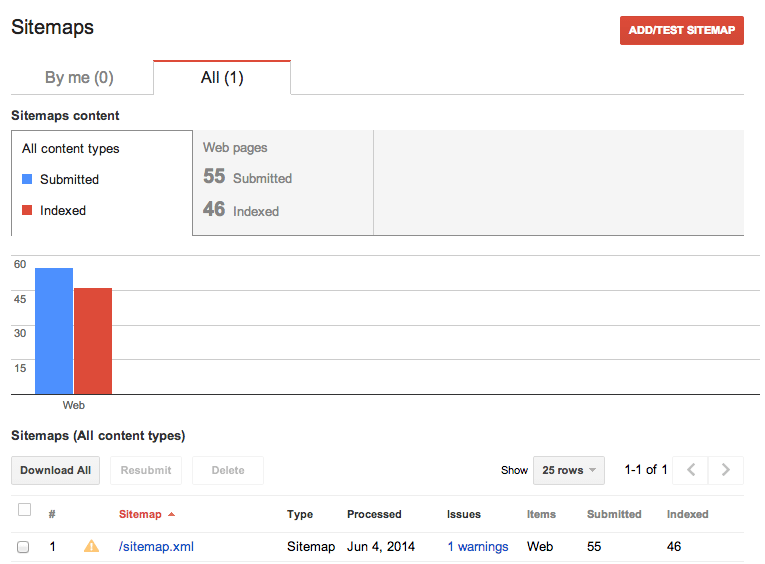

Sitemaps

Use the sitemap feature if you want to add a sitemap, test a sitemap, or discover what kind of content is being indexed in your sitemap.

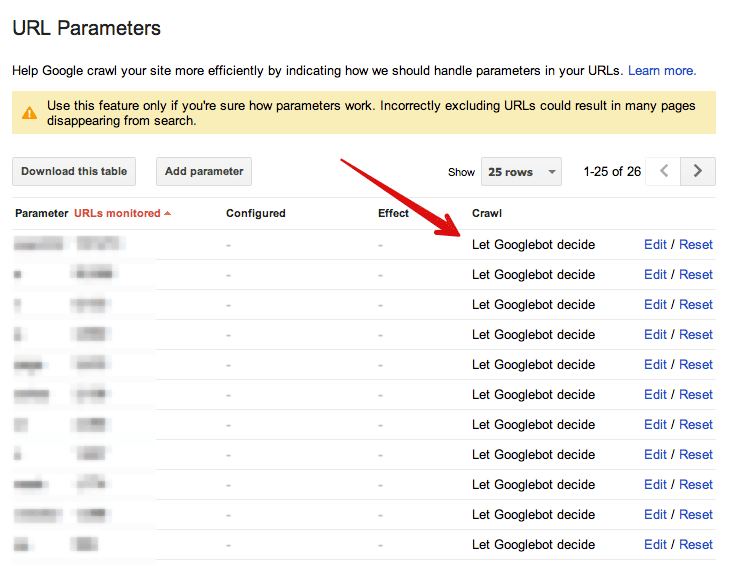

URL Parameters

Depending on the amount of duplicate content caused by dynamic URLs, you may have some indexing issues related to URL parameters. The URL Parameters section allows you to configure the way that Google crawls and indexes your site with URL parameters. By default, all pages are crawled according to the way that Googlebot decides.
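To see how dynamic URLs create duplicates, it helps to group URLs that differ only in parameters that don’t change the content. The sketch below does that with Python’s standard `urllib.parse`. The parameter names in `IGNORED_PARAMS`, the example URLs, and both helper functions are assumptions for illustration. Which parameters are safe to ignore depends entirely on your site.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that affect tracking or ordering, not the content.
IGNORED_PARAMS = {"sessionid", "utm_source", "sort"}


def canonical_form(url, ignored=IGNORED_PARAMS):
    """Drop ignored parameters and sort the rest so equivalent URLs match."""
    parts = urlsplit(url)
    kept = sorted((k, v) for k, v in parse_qsl(parts.query) if k not in ignored)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def duplicate_groups(urls):
    """Group URLs by canonical form and keep groups with more than one member."""
    groups = defaultdict(list)
    for url in urls:
        groups[canonical_form(url)].append(url)
    return {canon: members for canon, members in groups.items() if len(members) > 1}


URLS = [
    "https://www.example.com/shoes?color=red&sessionid=abc123",
    "https://www.example.com/shoes?sort=price&color=red",
    "https://www.example.com/shoes?color=blue",
    "https://www.example.com/shoes?utm_source=news&color=red",
]

duplicates = duplicate_groups(URLS)
for canon, members in duplicates.items():
    print(canon, "<-", len(members), "variants")
```

Each group is a set of URLs the crawler may treat as separate pages even though they show the same content. Those are the parameters worth reviewing in the URL Parameters section.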

Conclusion

If you want to really streamline and improve your site’s performance and SEO, then you ought to be giving some time and effort to Googlebot optimization. Some webmasters do not realize the amount of traffic that they are neglecting, simply because they have not given due attention to Googlebot optimization.

In order to be indexed and returned in search engine results, a site must be crawled. Unless the site is accurately crawled, it simply will not be indexed or returned. Start with this article, optimize your site for Googlebot, and see how it changes your traffic for the better.

What techniques do you use for Googlebot optimization?
