I’m willing to bet you’ve come across a lot of opinions about how to do conversion optimization.

You’ve probably formed some of your own ideas, too.

Unfortunately, in our work testing the so-called “best practices” over the past five years, my testing team and I have disproven many common recommendations for how to maximize website conversions and revenue.

That’s what this post is about: one of the most important distinctions between successful and mediocre conversion optimization testing programs. It’s about strategy.

There are two kinds of people doing conversion optimization: tacticians and strategists. Tacticians focus on following “best practices” and improving metrics like conversion rate. Conversion strategists, on the other hand, focus on building a repeatable strategic process that creates powerful hypotheses and insights in order to fulfill business goals.

The business results can be astounding.

For example, in today’s case study, Iron Mountain, the conversion optimization strategy led to a 45% lift in the first test, then a 404% boost (!), then another 44%, then an additional 38%, followed by a 49% conversion rate increase. And that was just on a few of the landing pages.

I’ll give you details on one of those A/B/n tests in a moment; but first, let’s review conversion optimization at a high level.

A Quick Primer on Conversion Optimization

Your website has two kinds of conversions:

- On-page actions (a type of micro-conversion)

- Revenue-driving conversions (the ones that support your business goals).

On-page actions are things like add-to-carts and form submissions. Revenue-driving conversions are things like e-commerce sales and quote-request leads for your sales team.

For both types of conversions, your conversion rate hinges on six factors:

- Value proposition – This is the sum of all the costs and benefits of taking action. What is the overall perceived benefit in your customer’s mind? Those perceived costs and benefits make up your value proposition.

- Relevance – How closely does the content on your page match what your visitors are expecting to see? How closely does your value proposition match their needs?

- Clarity – How clear are your value proposition, main message, and call-to-action?

- Anxiety – Are there elements on your page (or missing from your page) that create uncertainty in your customer’s mind?

- Distraction – What is the first thing you see on the page? Does it help or hurt your main purpose? What does the page offer that is conflicting or off-target?

- Urgency – Why should your visitors take action now? What incentives, offers, tone, and presentation will move them to action immediately?

These are the six elements that both tacticians and strategists should understand to be able to make conversion rate and revenue improvements.

The main difference between tacticians and strategists is how they plan and interpret their tests.

Where Tacticians Shine

Conversion tacticians shine in the places where details are happening. They think about button color and size. These are the people who have arguments over whether a Big Orange Button (I call him BOB) will solve the problem. Tacticians have a tool kit that includes a wide array of tested elements that can be applied to a problem quickly.

Tacticians are great for getting started with testing, but they rapidly hit a limit on the benefit they achieve from tweaking elements, rather than enhancing customer satisfaction and business goals.

Tactical conversion optimizers rarely create hypotheses that describe customer behavior. They are focused on elemental, on-page concerns like form fields, pop-up windows, or maximizing your testing tool’s capabilities. Elements like these are the quick and easy areas to attack first, but they rarely result in big gains for your business, and they don’t generate marketing insights that lead to the next great hypothesis.

Why Strategy Is Better

A strategic approach aims for more fundamental and ongoing improvements. Strategists aim for a mix of big wins and incremental improvements that yield marketing insights.

Conversion strategists know three important things:

- The only conversion rates that matter are relative.

- Conversion rate improvement is a means to an end—and that end is profit.

- Learning from hypotheses is more important than winning with every test.

That is why good strategists create documentation about why they are running tests and what customer needs they are trying to address. Making hypothesis-based testing part of your organizational culture is far more important than making a button the right color (hint: there is no right color). Strategists know that an ongoing, structured process of continuous learning and improvement delivers the best results over time.
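The first two points in that list can be made concrete with a little arithmetic. Here is a minimal Python sketch, using made-up numbers rather than results from any real test: the function names, rates, and profit figures are all hypothetical. It compares a control and a variation on relative lift and on profit per visitor.

```python
def relative_lift(control_rate: float, variation_rate: float) -> float:
    """Relative change of the variation's conversion rate versus the control's."""
    if control_rate <= 0:
        raise ValueError("control_rate must be positive")
    return (variation_rate - control_rate) / control_rate


def profit_per_visitor(conversion_rate: float, profit_per_conversion: float) -> float:
    """Expected profit from one visitor, given a rate and an average profit per conversion."""
    return conversion_rate * profit_per_conversion


# Hypothetical numbers for illustration only.
control = {"rate": 0.020, "profit_per_conversion": 50.0}
variation = {"rate": 0.025, "profit_per_conversion": 36.0}

lift = relative_lift(control["rate"], variation["rate"])
control_ppv = profit_per_visitor(control["rate"], control["profit_per_conversion"])
variation_ppv = profit_per_visitor(variation["rate"], variation["profit_per_conversion"])

print(f"Relative conversion rate lift: {lift:.0%}")
print(f"Profit per visitor: control ${control_ppv:.2f}, variation ${variation_ppv:.2f}")
```

In this invented scenario the variation lifts the conversion rate by 25% relative to the control, yet profit per visitor falls from $1.00 to $0.90 because each conversion is worth less. A tactician would call that a win, while a strategist would not.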

The strategist knows from experience to look for meaning behind the numbers rather than simply taking test results at face value.

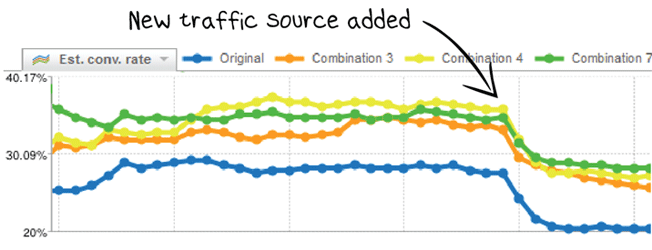

Take a look at this test result report:

At the point when a new traffic source was added to the test page, the conversion rates for all of the test variations dropped.

A tactician would see this conversion rate trend and say, “Oh no! Our conversion rate plummeted. We have to fix this!” They would run to the design department to order new buttons and images or turn off the new source of traffic.

A conversion strategist, however, would look deeper at sales and profit numbers to find out about order volume and order value. They might create a hypothesis for whether there’s a different customer need specific to the new traffic source and plan a new round of testing based on that hypothesis. The strategist may discover that our green combination is the best for the new traffic source, even though it isn’t the winner for the other traffic sources.

There could be many other insights and hypotheses the strategist would gain from the results analysis, too.
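To show what "looking deeper" might involve, here is a rough Python sketch that breaks test results down by traffic source and compares variations on revenue per visitor instead of on the blended conversion rate. The data, the source and variation names, and the helper functions are all hypothetical. It also skips statistical significance, which a real analysis would need to check.

```python
from collections import defaultdict

# Hypothetical results: (traffic source, variation, visitors, orders, revenue).
results = [
    ("existing", "control", 5000, 150, 7500.0),
    ("existing", "green",   5000, 140, 7000.0),
    ("existing", "blue",    5000, 175, 8750.0),
    ("new",      "control", 5000,  50, 2000.0),
    ("new",      "green",   5000,  80, 4000.0),
    ("new",      "blue",    5000,  55, 2200.0),
]


def summarize(rows):
    """Return {source: {variation: (conversion_rate, revenue_per_visitor)}}."""
    summary = defaultdict(dict)
    for source, variation, visitors, orders, revenue in rows:
        summary[source][variation] = (orders / visitors, revenue / visitors)
    return dict(summary)


def best_by_revenue(summary):
    """Pick the variation with the highest revenue per visitor for each source."""
    return {
        source: max(variations, key=lambda name: variations[name][1])
        for source, variations in summary.items()
    }


summary = summarize(results)
winners = best_by_revenue(summary)

for source, variations in summary.items():
    for name, (rate, rpv) in variations.items():
        print(f"{source:9s} {name:8s} rate={rate:.2%} revenue/visitor=${rpv:.2f}")
print(winners)
```

With these invented numbers, every variation converts at a lower rate on the new source, which is what drags the overall trend down. Within each segment, though, a different variation comes out ahead: blue for the existing traffic and green for the new source.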

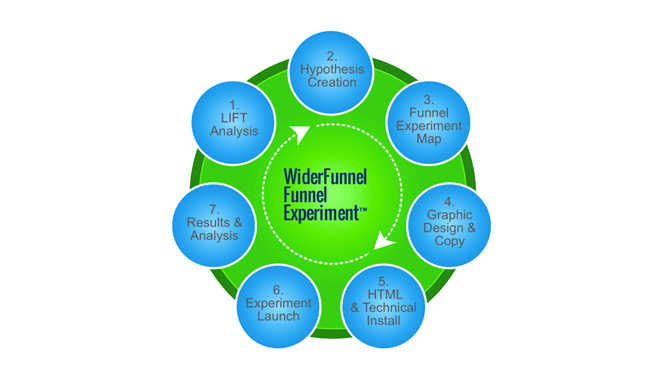

That’s why, in my 7-step testing process, the 7th step is often the most important. That’s where the strategist solidifies the insights that can be fed into the next tests.

A Conversion Optimization Strategy Looks at the Big Picture

With a conversion optimization strategy, every test leads to insights that lead to more hypotheses to test. The learning from each test leads to greater lift in the following tests.

Let’s look at the Iron Mountain example again. For the past three years, my team has been testing and optimizing the conversion rates for Iron Mountain’s most important marketing touch points. In that time, we’ve run many A/B/n tests on various areas of the website, including landing pages and site-wide elements on ironmountain.com.

This partnership had unique challenges that emphasized the need for a strategy:

- How do you implement a conversion optimization strategy for a website that has over 17,000 pages, plus landing pages and campaign microsites?

- How do you make sure your website experiences are maximizing conversion rates and that the learning is applied across the organization?

Your conversion optimization system needs good planning and great execution. Individual tests should feed learning back into an evolving understanding of your customers.

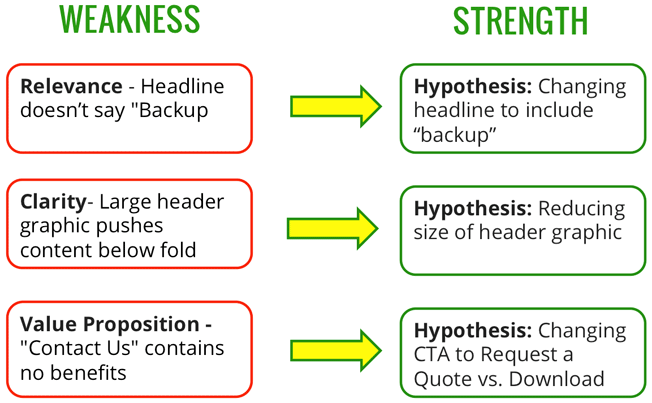

Here’s an example of one of the landing page analyses we performed as part of a testing strategy for Iron Mountain:

Notice that a strategist is not looking at specific tactical changes when planning for the test. Each of the called-out points is a conversion problem that can be turned into a hypothesis. Overall, there are also themes that emerge. Readability and distraction seem to be the major issues to address. This analysis contains 10 points of action that can be tested.

There is a rich pool of possible changes for each point of action. Any test that is performed in this structure, even the losing tests (because not every test will win), can offer marketing insight that can be taken to every portion of your site and business.

Strategy Turns Weakness into Strength

Take the worst thing on your page and hypothesize its opposite. This doesn’t mean changing everything that is orange to blue. It means making everything that is vague, clear. It means making everything that is distracting or frightening disappear.

The Results of Strategy: Big Wins plus Insights

The winning page design for this particular test gave Iron Mountain a 404% lift in lead generation conversion rate!

I won’t promise that every test gives results like that. And they don’t have to.

This result was built on the backs of previous tests because of the strategic approach we took.

Plus, the learning from this test gave insights that led to wins in other areas. The marketing team learned the type of offers that work best. That’s an insight that continues to pay dividends over and over again.

Strategy Wins Because It Offers New Ideas

eConsultancy reports that companies that take a structured approach to conversion optimization are twice as likely to report large increases in sales as those that don’t. Conversion strategy leads to structure and a continuous stream of new ideas, while conversion tactics alone lead to guessing and a constant hunger for more advice.

Conversion tactics by themselves are dead-end streets. If you’re overly attached to one technique or answer to a problem, you will always find the place where it fails. When tactical tests fail, they are simply over. When strategic hypothesis-based tests fail, you get insights that can be taken to a new understanding of your conversion problems.

Because conversion strategy puts emphasis on customer needs and creating a vision for solving problems related to the business action, not the page action, it leaves fewer moments where you have to ask “What’s next?” This means that if you keep testing, you keep getting new insights. With new insights, you get new ideas. And then you have a continuous cycle of improvement.

Within a strategic framework, each time you start a new round of hypotheses, you will find new success for your business.

About the Author: Chris Goward is Co-Founder and CEO of WiderFunnel, the conversion optimization agency, and author of “You Should Test That!” He developed conversion optimization strategies for clients like Electronic Arts, Google, SAP, Shutterfly, and Salesforce.com.
